In this article, we’ll look at how vehicle perception sensors are driving automation in advanced driver assistance system (ADAS) applications.

We’ll also consider some of the challenges that lie ahead.

Ongoing advances in sensor technology are integral to the goal of increasing road safety. How? ADAS features are built on data from sensors. They use it to warn drivers of safety risks as well as intervene to prevent accidents. Some vehicles combine ADAS applications with the infotainment system to provide drivers with a view of their surroundings.

ADAS relies on embedded systems throughout the vehicle. Cameras, radio detection and ranging (RADAR), light detection and ranging (LiDAR), and ultrasonic transducers all have roles in gathering the data. Global Navigation Satellite System (GNSS) receivers and Inertial Measurement Units (IMUs) provide additional inputs. The challenge is to interpret the data from embedded vision in real time so that the system can intervene.

To that end, modern ADAS applications need more computing power and memory to process the multiple data streams. New technologies have emerged over the past year to advance the state of the art in vehicle perception. These systems use GPUs, FPGAs, or custom ASICs within the electronic control units (ECUs) to accelerate graphics and image processing and to handle large volumes of data in real time.

ECUs are the brain controlling automotive sensor fusion. In what way? The ADAS controller fuses data from the different sensors to make decisions. Information-based systems use different cameras to alert the driver to hazards with blind-spot, lane departure, and collision warnings, while radar and other sensors at different levels help map the environment and take action.
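To make the idea of fusing data from different sensors concrete, here is a minimal sketch of one common approach: an inverse-variance weighted average of two distance estimates for the same object. It is an illustration only, not a description of any production ADAS controller. The `RangeEstimate` type, the `fuse` function, and the noise figures are all assumptions made for the example, and it further assumes the sensor errors are independent.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RangeEstimate:
    """Distance to one object as reported by one sensor (hypothetical type)."""
    sensor: str
    distance_m: float
    std_dev_m: float  # assumed 1-sigma measurement noise, in metres


def fuse(estimates: List[RangeEstimate]) -> Tuple[float, float]:
    """Inverse-variance weighted average of independent estimates.

    Returns (fused distance, fused standard deviation), both in metres.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    # A sensor with less noise gets a proportionally larger weight.
    weights = [1.0 / (e.std_dev_m ** 2) for e in estimates]
    total = sum(weights)
    distance = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return distance, (1.0 / total) ** 0.5


# Illustrative numbers only: the camera range is assumed noisier than RADAR.
camera = RangeEstimate("camera", distance_m=42.0, std_dev_m=4.0)
radar = RangeEstimate("radar", distance_m=40.0, std_dev_m=1.0)
fused_distance, fused_std = fuse([camera, radar])
print(f"fused distance: {fused_distance:.2f} m (std dev {fused_std:.2f} m)")
```

The fused value sits close to the RADAR reading because RADAR is assumed to be the more precise range sensor here, and the fused uncertainty is smaller than that of either sensor alone.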

The Society of Automotive Engineers (SAE) standards document, SAE J3016, is a good reference that defines six levels of driving automation, from 0 to 5, for on-road motor vehicles. The levels are defined by the specific role played by each of the three main performance actors: the user (human), the driving automation system, and other vehicle systems and components. Levels 0 through 3 require the presence of a human driver. At Levels 1 to 3, the human driver and the car’s automation system are expected to work hand in hand while driving. Levels 4 and 5 refer to true self-driving cars with artificial intelligence (AI) that need no human assistance while the automation is engaged: Level 4 only under specific conditions, Level 5 under all conditions.

The latest generation of vehicles is moving beyond Level 0-2 automation, which provides driver support: warnings and brief assistance such as blind spot warning and automatic emergency braking, as well as lane centering and adaptive cruise control.

Levels 3-4 advance to automated driving features, which can take control of the vehicle under specific conditions. ECUs use embedded vision to enable vehicles to make safety decisions instantly. How? Input from sensors, maps, and real-time traffic data allows ECUs to anticipate road issues ahead.
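The split between driver support (Levels 0-2) and automated driving (Levels 3-5) can be captured in a few lines of code. The sketch below is one possible way to model it. The level names follow SAE J3016, but the `SaeLevel` enum and the two helper functions are hypothetical names chosen for this example, and the role descriptions are paraphrases rather than the standard's wording.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 levels of driving automation."""
    NO_DRIVING_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def is_driver_support(level: SaeLevel) -> bool:
    """Levels 0-2 are driver support features; Levels 3-5 are automated driving."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


def human_role(level: SaeLevel) -> str:
    """Paraphrased summary of what is expected from the human at each level."""
    if is_driver_support(level):
        return "drives and supervises the support features at all times"
    if level == SaeLevel.CONDITIONAL_AUTOMATION:
        return "must be ready to take over when the feature requests it"
    return "is not required to take over while the feature is engaged"


for level in SaeLevel:
    print(f"Level {level.value} ({level.name}): the human {human_role(level)}")
```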

With more sensors built into the vehicle, the system can combine historical and real-time data about road conditions. This allows it not only to inform the driver but also to act. Deep learning computer vision algorithms are used to train neural network models. These models are trained and refined continually using road scenes and simulators. At run time, inference applies these models to detect, classify, and track objects like bikes and pedestrians in the vehicle’s field of view. The system then has enough information about the driver and the vehicle’s surroundings to take control, whether to avoid a hazard or simply to provide automatic parking.
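The tracking step can be illustrated without a neural network. Assuming a detector has already produced bounding boxes for the current frame, the sketch below links them to objects tracked in the previous frame by greedy intersection-over-union (IoU) matching. This is one simple association technique among several, not necessarily what any given ADAS stack uses. The `Box` alias, the `associate` function, the `min_iou` threshold, and the sample boxes are assumptions made for the example.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = overlap_w * overlap_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: Dict[int, Box], detections: List[Box],
              min_iou: float = 0.3) -> Dict[int, int]:
    """Greedily map track IDs to detection indices, best IoU first."""
    pairs = sorted(
        ((iou(box, det), track_id, i)
         for track_id, box in tracks.items()
         for i, det in enumerate(detections)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used = set()
    for score, track_id, i in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if track_id in matches or i in used:
            continue  # each track and each detection is matched at most once
        matches[track_id] = i
        used.add(i)
    return matches


# Previous frame: track 1 (say, a pedestrian) and track 2 (say, a bike).
tracks = {1: (100, 100, 150, 200), 2: (300, 120, 380, 180)}
# Current frame: the detector reports the same objects, slightly moved.
detections = [(305, 122, 384, 181), (104, 101, 154, 202)]
matches = associate(tracks, detections)
print(matches)
```

Detections left unmatched would start new tracks, and tracks left unmatched for several frames would be dropped. Both steps are omitted here to keep the sketch short.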

Vehicles already benefit from a wide variety of 4G-enabled services for the driver and passengers. With the implementation of 5G, real-time connectivity can complement advanced on-board sensors (RADAR, LiDAR, cameras), so driver assistance systems can also be seen as a gateway to fully autonomous driving.

There is still work to be done to achieve full autonomy and the implementation of a Level 5 self-driving car. Changes in sensor positioning and integration will be incremental as these technologies advance and costs decrease.

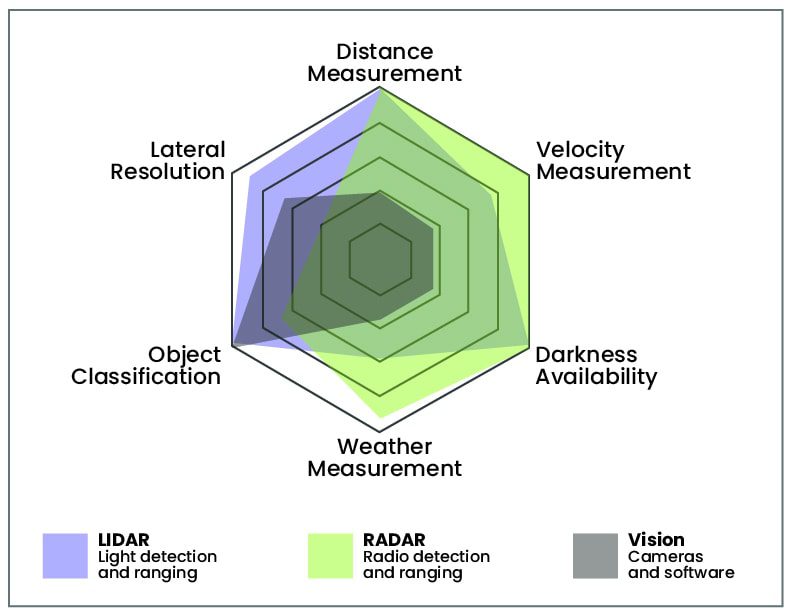

The vehicles we can buy today are becoming smarter and safer and are equipped with the three main types of sensors: cameras, RADAR, and LiDAR. With these sensors coupled with a powerful computer system, the vehicle can map, understand, and navigate the environment around it safely. Every sensor has its pros and cons. What are the fundamental differences between these three types of sensors? Let’s take a look.

Cameras capture red, green, and blue (RGB) color information at extremely high resolution, but they lack depth information and speed perception and can be blinded by sunlight. They also require significantly more computing power and must be combined with RADAR and LiDAR sensors as complementary technologies to get the most out of the combined data.

Often paired with cameras, RADAR can help reduce the number of video frames needed for the vehicle to detect a hazard and respond. It offers advantages in speed and object detection, as well as perception around objects and the ability to operate in bad weather. But it is not precise enough to identify whether an object is a pedestrian, a car, or something else.
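Because RADAR reports both range and relative speed, a controller can estimate how long it has to react. The sketch below uses a time-to-collision calculation to choose between warning the driver and braking. It is a simplified illustration under stated assumptions: the function names and the 2.5 s and 1.0 s thresholds are hypothetical, and the closing speed is assumed to stay constant.

```python
import math


def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact at constant closing speed; infinite if not closing."""
    if closing_speed_mps <= 0.0:
        return math.inf  # the object is holding distance or moving away
    return range_m / closing_speed_mps


def decide(range_m: float, closing_speed_mps: float,
           warn_s: float = 2.5, brake_s: float = 1.0) -> str:
    """Return 'brake', 'warn', or 'none'. The thresholds are illustrative only."""
    ttc = time_to_collision(range_m, closing_speed_mps)
    if ttc <= brake_s:
        return "brake"
    if ttc <= warn_s:
        return "warn"
    return "none"


print(decide(range_m=50.0, closing_speed_mps=10.0))  # 5.0 s to collision
print(decide(range_m=20.0, closing_speed_mps=10.0))  # 2.0 s to collision
print(decide(range_m=8.0, closing_speed_mps=10.0))   # 0.8 s to collision
```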

Placed throughout the vehicle, LiDAR complements the cameras and RADAR to provide 360° coverage and very fine, accurate detection of objects in space. But LiDAR does not have resolution comparable to that of a 2D camera, and it has limitations in poor weather conditions. In addition, it does not detect colors or interpret text to identify, for example, traffic lights or signs. Cost is also an issue when integrating LiDAR into advanced driver assistance systems. The recent emergence of efficient, innovative designs has paved the way for affordable solutions that bring high-resolution 3D imaging to the automotive industry.

Fundamental differences between LiDAR, RADAR, and vision

Beyond cameras, RADAR, and LiDAR, other systems play an important role in addressing ADAS safety development challenges. A fully autonomous vehicle must have an accurate localization solution. Ground data, mobile mapping, and accurate real-time positioning are essential. High-precision GNSS technology provides the accuracy, availability, and reliability that a vehicle requires to be self-driving.

Combining technologies such as RADAR, LiDAR, and cameras with GNSS solutions is likely the best way to deliver the positioning performance required by Level 4 and 5 autonomous vehicles.

Most vehicles produced in the world are equipped with some level of driving automation, and ADAS is one of the fastest growing segments of automotive electronics. To remain competitive, development teams must have the experience to deliver high-quality solutions with minimal risk, and must understand sensor technologies well enough that the vehicle can fully respond to its environment and provide an enhanced user experience.

At Orthogone, we have the expertise to help you develop a safe and smart vehicle.

We partner with automotive manufacturers and suppliers to design high-performance systems and we understand the growing challenge around the use of embedded systems in ADAS.

Contact us today to learn how you can partner with Orthogone for your automotive embedded systems project.